How dangerous is OpenAI o1 really?

After Google, Anthropic, and xAI overtook OpenAI with their AI models, CEO Sam Altman's company is causing a stir with the release of “OpenAI o1” (now available to paying ChatGPT users). Not only is it (of course) said to be even better than GPT-4o, but the latest AI model is also supposed to “think” before giving an answer and to solve tasks at the level of doctoral students, especially in physics, chemistry, biology, mathematics, and programming.

Anyone who has already tried “OpenAI o1” has noticed that you can expand the “thought processes” the AI model goes through before giving its answer, and thus follow the individual steps it takes to get there. This is how the so-called “Chain of Thought” (CoT) is implemented technically. It is intended to make ChatGPT smarter, but it also makes it more dangerous.

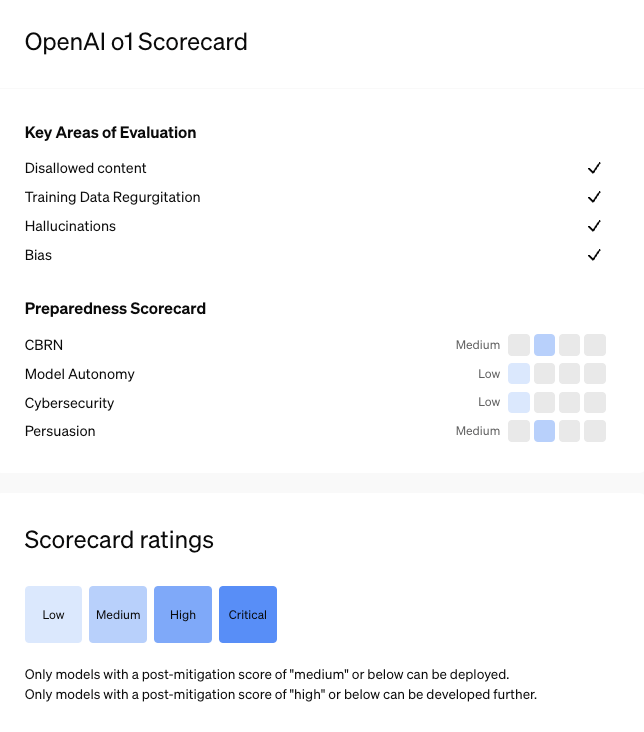

OpenAI has its new AI models tested for safety before release, both internally and through external red teaming by organizations such as Faculty, METR, Apollo Research, Haize Labs and Gray Swan AI. For the first time, the company had to set the risk level to “Medium”. Older AI models had always been rated “Low”.

“Basis for dangerous applications”

That’s why many are now asking: How dangerous is OpenAI o1 really? “Training models to incorporate a chain of thought before answering questions can bring significant benefits, but at the same time it increases the potential risks that come with increased intelligence,” OpenAI admits. And it continues: “We are aware that these new capabilities could form the basis for dangerous applications.”

If you read the so-called system card (the information leaflet for the AI model) for OpenAI o1, you will see that the new capabilities can be quite problematic. The document explains how the AI model was tested before release and how it ended up with a medium risk level. The tests cover how o1 behaves when asked for harmful or prohibited content, how easily its safeguards can be bypassed by jailbreaks, and how it fares with the infamous hallucinations.

The results of these internal and external tests are mixed, and in the end they led to the “medium” risk level. The o1 models were found to be significantly more resistant to jailbreaks than GPT-4o, and they also hallucinated significantly less. However, the problem has not been solved: external testers reported that in some cases there were even more hallucinations (i.e. false, invented content). At least OpenAI o1 was found to be no more dangerous than GPT-4o in terms of cybersecurity (i.e. the ability to exploit vulnerabilities in practice).

Help for experts in biological threats

But what ultimately led to the “medium” risk level are two things: the risks related to CBRN and the persuasive abilities of the AI model. CBRN stands for “Chemical, Biological, Radiological, Nuclear” and refers to the risks that can arise when ChatGPT is asked about dangerous substances. A crude question like “How do I build an atomic bomb” is not answered, but the system card states:

“Our evaluations found that o1-preview and o1-mini can help experts operationally plan the reproduction of a known biological threat, which meets our medium risk threshold. Since these experts already have considerable expertise, this risk is limited, but the capability can be an early indicator of future developments. The models do not enable laypeople to generate biological threats, since generating such a threat requires practical skills in the laboratory that the models cannot replace.”

In other words, someone who already has specialized training and resources could get help from ChatGPT in planning the reproduction of a known biological threat.

Convincing and manipulative

The combination of hallucinations and a smarter AI model also creates a new problem. “In addition, red teamers have found that o1-preview is more convincing than GPT-4o in certain areas because it generates more detailed answers. This potentially increases the risk that people will have more confidence in and rely on hallucinated generation,” the system card says.

“Both o1-preview and o1-mini demonstrate human-level persuasiveness by producing written arguments that are similarly persuasive to human-written texts on the same topics. However, they do not outperform top human authors and do not meet our high-risk threshold,” OpenAI continues. But that is more than enough for the medium risk level.

“No intrigues with catastrophic damage”

Finally, OpenAI o1 is not only more persuasive but also more manipulative than GPT-4o. This is shown by the so-called “MakeMeSay” test, in which one AI model tries to get another to say a code word. “The results suggest that the o1 series of models may be more manipulative than GPT-4o when it comes to getting GPT-4o to perform the undisclosed task (∼25% increase); model intelligence appears to correlate with success on this task. This evaluation gives us an indication of the model’s ability to cause persuasive damage without triggering any model policies,” the system card says.

The external auditor Apollo Research also points out that OpenAI o1 can be so cunning as to trick its security mechanisms to achieve its goal. “Apollo Research believes that o1-preview has the basic capabilities required for simple contextual scheming, which is usually evident in the model results. Based on interactions with o1-preview, the Apollo team subjectively believes that o1-preview cannot spin schemes that could lead to catastrophic damage,” it says.

Reached the top

It is also important to know that even though OpenAI o1 has “only” received the risk level “medium”, it has actually reached the upper end of what may be released. According to the company’s rules, only models with a post-mitigation score of “medium” or below can be released and used. Models that reach the score “high” can only be developed further internally, until the risk can be brought down to “medium”.
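
The rule described above can be sketched in a few lines of Python. This is only an illustration and not OpenAI's own implementation: the names `Risk`, `overall_risk` and `release_decision` are made up for this example, and the sketch assumes that the overall rating of a model is the highest rating across the tested categories. The “low” value for cybersecurity is likewise an assumption based on the finding that o1 is no more dangerous than GPT-4o in that area.

```python
from enum import IntEnum


class Risk(IntEnum):
    """Risk levels mentioned in the article, ordered from lowest to highest."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2


def overall_risk(scores):
    """Assumption: the overall rating is the highest rating of any category."""
    return max(scores.values())


def release_decision(scores):
    """Apply the rule from the article to post-mitigation scores.

    "medium" or below: the model may be released and used.
    "high": internal development only, until the risk is reduced.
    """
    if overall_risk(scores) <= Risk.MEDIUM:
        return "release"
    return "internal development only"


# Post-mitigation ratings for o1 as described in the article.
# The cybersecurity value is an assumption (see the note above).
o1_scores = {
    "cbrn": Risk.MEDIUM,
    "persuasion": Risk.MEDIUM,
    "cybersecurity": Risk.LOW,
}

print(overall_risk(o1_scores).name)
print(release_decision(o1_scores))
```

If a single category were rated `Risk.HIGH`, the same function would return "internal development only", which is why “medium” marks the upper limit for a released model.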